Supercharging Your FastAPI Application with Celery and RabbitMQ

By Rajan Sahu

When you need to perform heavy or time-consuming tasks like sending emails, processing large files, or crunching numbers, you might want something more robust to handle these tasks in the background. This is where Celery comes into play. Paired with RabbitMQ, Celery can transform your FastAPI application into a powerhouse that efficiently handles background tasks.

This article explores using Celery with RabbitMQ in a FastAPI application. We’ll look at why and when to use Celery, compare it with FastAPI’s built-in background tasks, and explain why we chose RabbitMQ as our broker.

Before starting, let's understand why we need Celery.

Celery is an asynchronous task queue system that lets you run tasks in the background. It’s useful in the following cases:

- Time-Consuming Tasks: Tasks that take a long time to complete, like sending bulk emails, or tasks that don't need to finish before the response is returned, like sending a welcome message.

- Repetitive Tasks: Recurring work, such as scheduled jobs, is managed efficiently by Celery.

- Error Handling: Celery provides robust error handling and retry mechanisms essential for production-grade applications.

But the question remains: why do we need Celery when FastAPI Background Tasks are available?

Yes! FastAPI has a built-in background tasks feature, which is great for simple use cases. However, it has its limitations:

- Limited Scalability: FastAPI background tasks are tied to the lifespan of the API instance, making them less suitable for long-running or highly scalable systems.

- No Distributed Task Processing: FastAPI background tasks run within the same process as your FastAPI application, meaning they can’t be distributed across multiple machines.

- No Result Backend: FastAPI doesn’t provide a way to store the results of background tasks for later retrieval.

Celery Advantages:

- Scalability: Celery can scale to multiple worker nodes, each capable of processing tasks independently.

- Distributed Processing: Celery can distribute tasks across multiple servers, allowing for high availability and load balancing.

- Result Backend: Celery provides a result backend where you can store and retrieve task results, making it more versatile.

Enough theory — let’s dive straight into the implementation.

Before starting the implementation, we need a broker to carry messages between our application and the Celery workers. For this, we will use RabbitMQ, and you’re probably wondering why we chose it as our message broker.

RabbitMQ is a popular message broker that Celery can use to pass task messages from the client to the workers. But why RabbitMQ and not other brokers like Redis or Kafka?

- RabbitMQ is built for reliable delivery: with durable queues, persistent messages, and acknowledgements, queued tasks can survive a broker restart.

- RabbitMQ has a web-based management interface that makes monitoring and managing your message queues easy.
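
Reliability also depends on how the Celery side acknowledges messages. As a rough sketch, the settings below are real Celery configuration keys, but the values and the helper `apply_reliability_settings` are assumptions for illustration, not something this article's setup requires.

```python
# Illustrative values only; tune them for your own workload.
RELIABILITY_SETTINGS = {
    "task_acks_late": True,              # acknowledge a message only after the task finishes
    "task_reject_on_worker_lost": True,  # requeue the task if the worker process dies mid-run
    "worker_prefetch_multiplier": 1,     # reserve one message per worker process at a time
}


def apply_reliability_settings(conf) -> None:
    """Apply the settings to any mapping-like config, e.g. a Celery app's `conf`."""
    conf.update(**RELIABILITY_SETTINGS)
```

With a Celery app in hand you would call `apply_reliability_settings(celery_app.conf)`. Keep in mind that late acknowledgement means a task can run more than once after a crash, so such tasks should be safe to repeat.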

Let's Set Up RabbitMQ

We will use the Docker image of RabbitMQ, so you need Docker installed on your machine first.

Pulling the Image

```bash
docker pull rabbitmq:3-management
```

This command pulls the RabbitMQ image with the management plugin enabled, which provides a web-based UI for managing your RabbitMQ server.

Running a RabbitMQ Container

```bash
docker run -d --name rabbitmq -p 5672:5672 -p 15672:15672 rabbitmq:3-management
```

Port 5672 is for the RabbitMQ server (AMQP connections), and 15672 is for the management UI.

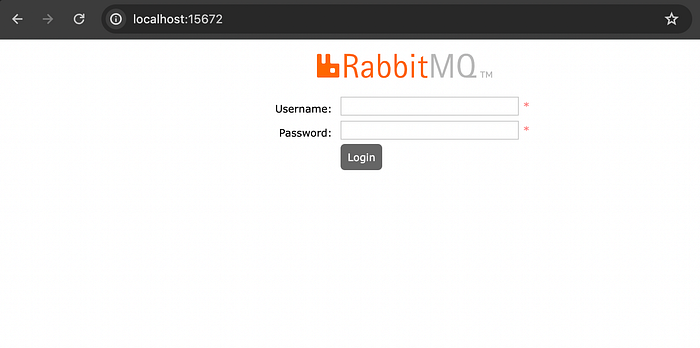

Access the RabbitMQ Management Console:

- Open a web browser and navigate to http://localhost:15672/.

- Log in with the default username guest and password guest.
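
If you prefer to check the broker from code rather than the browser, the management plugin also exposes an HTTP API on the same port. The sketch below uses only the standard library and assumes the default guest/guest credentials and the `/api/overview` endpoint; the function names are hypothetical.

```python
import base64
import json
import urllib.request


def build_overview_request(host: str = "localhost", port: int = 15672,
                           user: str = "guest", password: str = "guest") -> urllib.request.Request:
    """Build an authenticated GET request for the management API overview."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"http://{host}:{port}/api/overview",
        headers={"Authorization": f"Basic {token}"},
    )


def fetch_overview(request: urllib.request.Request, timeout: float = 5.0) -> dict:
    """Send the request and decode the JSON body (needs a running RabbitMQ container)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the container running, `fetch_overview(build_overview_request())` should return a dictionary describing the broker; if the call fails, the container is probably not up yet.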

Let’s walk through a simple example of integrating Celery and RabbitMQ into a FastAPI application.

Before that, you need to install the dependencies (uvicorn is the server we use to run the app later):

```bash
pip install fastapi
pip install celery
pip install uvicorn
```

Configure Celery

Here’s a basic Celery configuration with RabbitMQ as the message broker:

```python
# celery_main.py
import time

from celery import Celery

# Celery configuration with RabbitMQ as the broker
celery_app = Celery(
    "celery_main",
    broker="amqp://guest:guest@localhost:5672//",
    # The rpc:// backend sends results back as messages to the client
    # process that submitted the task.
    backend="rpc://",
)

celery_app.conf.update(
    result_expires=3600,  # Results expire in one hour
)


@celery_app.task
def send_email_task(email: str, subject: str, body: str):
    # Simulate a slow email send
    time.sleep(5)
    print(f"Email sent to {email} with subject {subject}")
    return {"email": email, "subject": subject, "status": "sent"}
```

Create the FastAPI Application

Next, we’ll create a FastAPI application that interacts with our Celery tasks:

```python
# main.py
from celery.result import AsyncResult
from fastapi import FastAPI

from celery_main import celery_app, send_email_task

app = FastAPI()


@app.post("/send-email/")
async def send_email(email: str, subject: str, body: str):
    # Queue the email task on Celery and return its ID right away
    task = send_email_task.delay(email, subject, body)
    return {"task_id": task.id}


@app.get("/task-status/{task_id}")
async def get_task_status(task_id: str):
    # Check the status of the Celery task
    task_result = AsyncResult(task_id, app=celery_app)
    result = task_result.result
    if isinstance(result, Exception):
        # A failed task stores its exception, which is not JSON serializable
        result = str(result)
    return {"task_id": task_id, "status": task_result.status, "result": result}
```

Running the Application

To run the application, follow these steps:

1. Start RabbitMQ on your system.

2. Open a terminal and start the Celery worker with the command:

```bash
celery -A celery_main.celery_app worker --loglevel=info
```

3. Open a different terminal and run the FastAPI app:

```bash
uvicorn main:app --reload
```

Now, when you send a request to /send-email/, the task will be processed asynchronously by Celery, and you can check the task’s status with /task-status/{task_id}.
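
A client usually has to poll the status endpoint until the task settles. Here is a small standard-library sketch of that loop; the helper names, the polling interval, and the base URL `http://localhost:8000` (uvicorn's default address) are assumptions, and the `fetch` parameter exists only so the loop can be tried without a running server.

```python
import json
import time
import urllib.request
from typing import Callable

# Celery states after which a task will not change again.
FINISHED_STATES = {"SUCCESS", "FAILURE", "REVOKED"}


def fetch_status(task_id: str, base_url: str = "http://localhost:8000") -> dict:
    """GET /task-status/{task_id} from the FastAPI app and decode the JSON."""
    with urllib.request.urlopen(f"{base_url}/task-status/{task_id}", timeout=5) as resp:
        return json.loads(resp.read().decode("utf-8"))


def wait_for_task(task_id: str, fetch: Callable[[str], dict] = fetch_status,
                  interval: float = 1.0, max_polls: int = 30) -> dict:
    """Poll until the task reaches a finished state or max_polls is used up."""
    payload = fetch(task_id)
    for _ in range(max_polls - 1):
        if payload["status"] in FINISHED_STATES:
            break
        time.sleep(interval)
        payload = fetch(task_id)
    return payload
```

After posting to /send-email/, you could pass the returned `task_id` to `wait_for_task(task_id)` and read the final payload from its return value.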

If the task is still pending, you will get the response below:

```json
{
  "task_id": "629071be-b12e-45f1-a796-347a941b4a39",
  "status": "PENDING",
  "result": null
}
```

Otherwise:

```json
{
  "task_id": "418afe12-1e78-49cd-b335-59f189325be5",
  "status": "SUCCESS",
  "result": {
    "email": "rajansahu713@gmail.com",
    "subject": "<h1>Welcome Rajan!!<h1>",
    "status": "sent"
  }
}
```

Conclusion

By integrating Celery with RabbitMQ in your FastAPI application, you can efficiently handle background tasks, making your application more responsive and scalable. Celery offers a range of powerful features that go beyond what FastAPI’s built-in background tasks provide, and RabbitMQ’s reliability features help ensure your tasks are processed safely.

Thanks for sticking around till the end! If you spot anything off or have some cool tips to share, drop them in the comments. Let’s learn together!